Bullet-Time Effect

With dynamic NeRF, you can create fun visual effects like the bullet-time shot in this scene from "The Matrix". In the movie, they used over 100 cameras to capture a scene that we can create with a single one.
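A bullet-time shot amounts to freezing the time input of a dynamic NeRF while sweeping the camera around the subject. The sketch below is an illustration under our own assumptions, not DGD-NeRF's code: it builds camera-to-world matrices on a horizontal arc around a hypothetical scene `center` and pairs each one with the same frozen time `t_freeze`. It assumes the common NeRF/OpenGL convention in which the camera looks along its own -z axis; `look_at`, `bullet_time_path`, `radius`, and `height` are hypothetical names.

```python
import numpy as np


def look_at(eye, target, up=np.array([0.0, 1.0, 0.0])):
    """Camera-to-world matrix that points the camera's -z axis from `eye` at `target`."""
    forward = target - eye
    forward = forward / np.linalg.norm(forward)
    right = np.cross(forward, up)
    right = right / np.linalg.norm(right)
    true_up = np.cross(right, forward)
    c2w = np.eye(4)
    c2w[:3, 0] = right      # camera +x
    c2w[:3, 1] = true_up    # camera +y
    c2w[:3, 2] = -forward   # camera +z points away from the target
    c2w[:3, 3] = eye        # camera position in world coordinates
    return c2w


def bullet_time_path(center, radius, height, t_freeze, n_views=60, arc_deg=180.0):
    """Poses on an arc around `center`, all paired with the same frozen time."""
    angles = np.deg2rad(np.linspace(-arc_deg / 2, arc_deg / 2, n_views))
    path = []
    for a in angles:
        eye = center + np.array([radius * np.sin(a), height, radius * np.cos(a)])
        path.append((look_at(eye, center), t_freeze))
    return path
```

Each `(c2w, t)` pair would then be handed to the trained model's renderer, which is not shown here. Holding `t` fixed is what freezes the motion; only the viewpoint changes from frame to frame.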

Current techniques for novel view synthesis with neural radiance fields (NeRF) in non-rigid 3D scenes produce scene representations that still deviate noticeably from the ground truth.

Our approach, DGD-NeRF, reduces this error by improving an existing dynamic NeRF and extending it with depth information from an RGB-D dataset. We constrain the optimization with depth supervision and depth-guided sampling. Our method outperforms current techniques at rendering novel views of the learned scene representation.
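This page does not spell out the exact depth loss. A common formulation, assumed here, renders the expected ray-termination depth from the standard NeRF volume-rendering weights and penalizes its L1 distance to the sensor depth, skipping pixels where the sensor reports no depth (assumed to be encoded as 0). `render_weights` and `depth_loss` are hypothetical helpers written in NumPy for illustration; a real training loop would use an autodiff framework.

```python
import numpy as np


def render_weights(sigma, t_vals):
    """NeRF volume-rendering weights w_i = T_i * (1 - exp(-sigma_i * delta_i)).

    sigma, t_vals: arrays of shape (num_rays, num_samples), t_vals sorted per ray.
    """
    deltas = np.diff(t_vals, axis=-1)
    # The last sample gets a very large interval, as in the original NeRF code.
    deltas = np.concatenate([deltas, np.full(deltas.shape[:-1] + (1,), 1e10)], axis=-1)
    alpha = 1.0 - np.exp(-sigma * deltas)
    # Transmittance T_i = prod_{j<i} (1 - alpha_j), an exclusive cumulative product.
    ones = np.ones(alpha.shape[:-1] + (1,))
    trans = np.cumprod(np.concatenate([ones, 1.0 - alpha[..., :-1]], axis=-1), axis=-1)
    return trans * alpha


def depth_loss(weights, t_vals, sensor_depth):
    """Mean L1 error between expected ray depth and sensor depth (0 = no measurement)."""
    pred_depth = np.sum(weights * t_vals, axis=-1)
    valid = sensor_depth > 0
    if not np.any(valid):
        return 0.0
    return float(np.mean(np.abs(pred_depth[valid] - sensor_depth[valid])))
```

In practice this term would be added to the photometric loss with a weighting factor; the choice of L1 over L2 here is an assumption, made because L1 is less sensitive to outliers in consumer depth maps.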

We built a custom RGB-D dataset of challenging scenes. We used an iPad to record RGB-D videos and the corresponding camera poses.

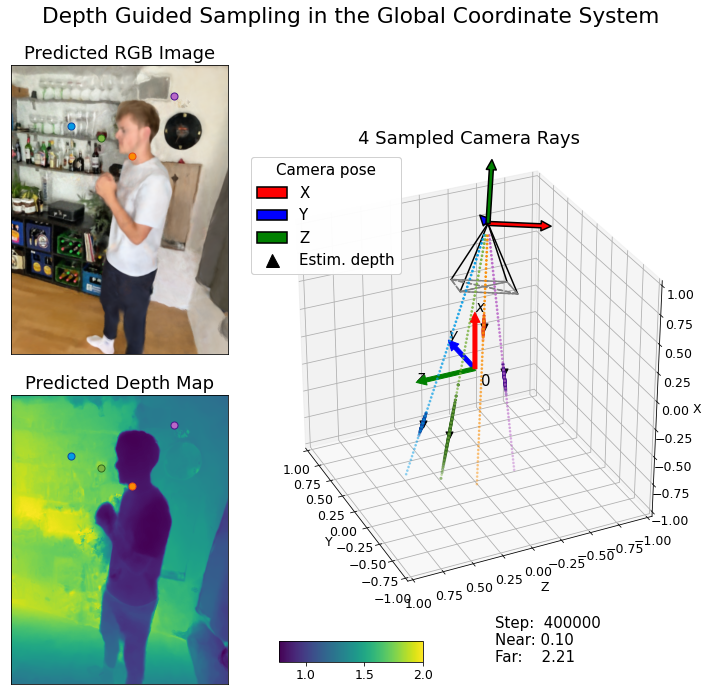

Depth-guided sampling ensures that most samples are placed at spatial locations near the first surface along the ray.
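One way to realize this, assumed here rather than taken from DGD-NeRF's code, is to draw most of a ray's samples from a Gaussian centred on the sensor depth and the rest uniformly between the near and far bounds, falling back to purely uniform sampling where the depth map has no valid value (assumed to be 0). `depth_guided_samples`, `frac_near_surface`, and `depth_std` (the spread in scene units) are hypothetical names and defaults.

```python
import numpy as np


def depth_guided_samples(near, far, depth, n_samples=64, frac_near_surface=0.75,
                         depth_std=0.05, rng=None):
    """Sorted sample distances along one ray, concentrated around the measured depth."""
    rng = np.random.default_rng() if rng is None else rng
    n_surface = int(round(n_samples * frac_near_surface))
    n_uniform = n_samples - n_surface
    # Uniform samples keep some coverage of free space and of occluded regions.
    uniform = rng.uniform(near, far, size=n_uniform)
    if depth > 0:
        # Gaussian samples centred on the sensor depth, i.e. the first visible surface.
        surface = rng.normal(loc=depth, scale=depth_std, size=n_surface)
    else:
        # No valid depth for this pixel: sample the whole ray uniformly instead.
        surface = rng.uniform(near, far, size=n_surface)
    # Clip to the ray bounds and sort, since volume rendering expects ordered samples.
    return np.sort(np.clip(np.concatenate([uniform, surface]), near, far))
```

Keeping a fraction of uniform samples is a design choice: depth maps from mobile sensors are noisy and incomplete, so sampling only around the measured depth could miss surfaces the sensor got wrong.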

Using DGD-NeRF, you can re-render a video from a novel viewpoint, such as a stabilized camera, by playing back the deformations learned during training.
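The page does not describe how the stabilized trajectory is obtained. As an illustration under that caveat, the sketch below smooths the training camera trajectory with a centred moving average and pairs each smoothed pose with the original frame time, so the learned deformations play back as recorded. Translations are averaged directly; rotations are averaged and projected back onto SO(3) with an SVD, which is reasonable only when rotations change little within the window. `project_to_so3`, `stabilize_poses`, `playback_schedule`, and `window` are hypothetical names.

```python
import numpy as np


def project_to_so3(m):
    """Nearest rotation matrix (in Frobenius norm) to a 3x3 matrix, via SVD."""
    u, _, vt = np.linalg.svd(m)
    r = u @ vt
    if np.linalg.det(r) < 0:
        # Flip one axis so the result is a proper rotation, not a reflection.
        u[:, -1] *= -1
        r = u @ vt
    return r


def stabilize_poses(c2w, window=9):
    """Smooth camera-to-world poses of shape (n, 4, 4) with a centred moving average."""
    c2w = np.asarray(c2w, dtype=float)
    n = len(c2w)
    half = window // 2
    out = np.empty_like(c2w)
    for i in range(n):
        # The window shrinks near the start and end of the sequence.
        lo, hi = max(0, i - half), min(n, i + half + 1)
        out[i] = np.eye(4)
        out[i, :3, :3] = project_to_so3(c2w[lo:hi, :3, :3].mean(axis=0))
        out[i, :3, 3] = c2w[lo:hi, :3, 3].mean(axis=0)
    return out


def playback_schedule(c2w_train, times, window=9):
    """Pair each smoothed pose with the original training timestamp."""
    return list(zip(stabilize_poses(c2w_train, window), times))
```

Rendering every `(pose, t)` pair in order would reproduce the recorded motion of the scene while the camera follows the smoothed path. Replacing all poses with a single fixed pose would give a fully locked-off camera instead.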

@article{kirmayr2022dgdnerf,
  author = {Kirmayr, Johannes and Wulff, Philipp},
  title  = {Dynamic NeRF on RGB-D Data},
  year   = {2022},
  month  = {Jul},
  url    = {https://philippwulff.github.io/DGD-NeRF/}
}